Understanding the OMT Infrastructure

The Platform runs in the OPTIC Management Toolkit (OMT) infrastructure, which incorporates container management functions from Kubernetes. This containerized environment enables you to swiftly install and manage an integrated solution of ArcSight products in a single interface. The OMT has both an OMT Installer and a browser-based OMT Management Portal.

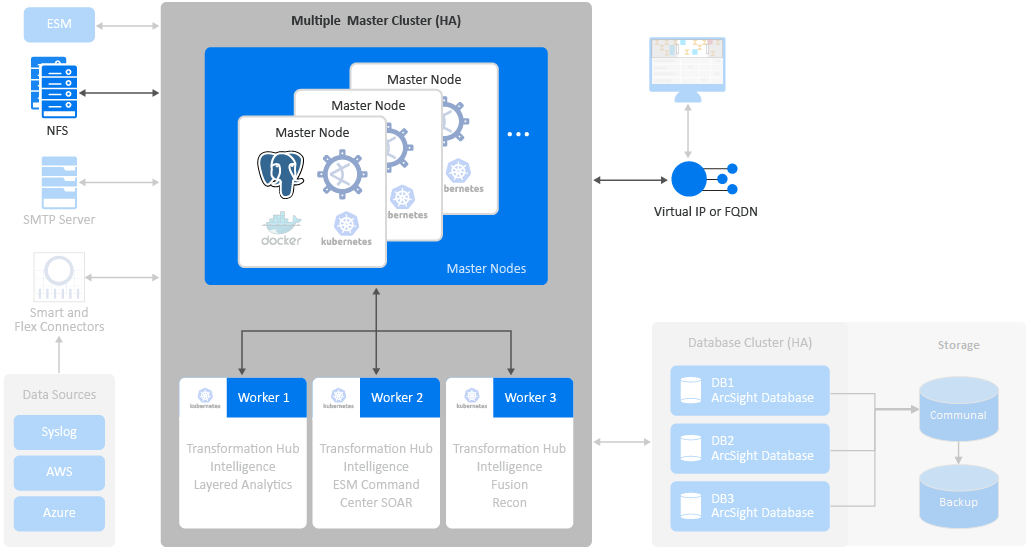

You will also need to install additional software and components to support your security solution. Your ArcSight environment might include the containerized capabilities, which are distributed across multiple host systems, plus servers for databases and the supporting products. The number of hosts you need depends on several factors, such as the need for high availability and the size of workloads based on events per second.

The OMT architecture requires several components:

- OMT Installer

- OMT Management Portal

- Kubernetes

- Master Nodes

- Network File System

- Worker Nodes

- Virtual IP Address

OMT Installer

The OMT Installer is used for installing, configuring, and upgrading the OMT infrastructure. For more information about using the OMT Installer, see the following topics:

- Planning to Install and Deploy

- Choosing Your Installation Method

- Using ArcSight Platform Installer for an Automated On-premises Installation

- Installing the OMT Infrastructure

- Configuring and Running the OMT Installer

- Reinstalling the Platform

OMT Management Portal

The Management Portal enables you to manage and reconfigure your deployed environment after the installation process is complete. You can add or remove deployed capabilities and worker nodes, as well as manage license keys.

During installation, you specify the credentials for the administrator of the Management Portal. This administrator is not the same as the admin user that you are prompted to create the first time that you log in to the Platform after installation. You'll use the Management Portal to upgrade the deployed capabilities with every Platform upgrade.

Kubernetes

Kubernetes automates deployment, scaling, maintenance, and management of the containerized capabilities across the cluster of host systems. Applications running in Kubernetes are defined as pods, which group containerized components. Kubernetes clusters use Docker containers as the pod components. A pod consists of one or more containers that are guaranteed to be co-located on the host server and can share resources. Each pod in Kubernetes is assigned a unique IP address within the cluster, allowing applications to use ports without the risk of conflict.
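
The per-pod addressing described above can be illustrated with a small model. This is a simplified sketch, not Kubernetes code: the `Pod` and `Cluster` classes, the pod names, and the `172.16.0.0/24` address range are all hypothetical. It shows why two pods can listen on the same port without conflict (each endpoint is an IP and port pair), while two containers inside one pod cannot.

```python
from dataclasses import dataclass, field
from ipaddress import IPv4Address, IPv4Network


@dataclass
class Pod:
    """A pod: one or more co-located containers that share one cluster IP."""
    name: str
    ip: IPv4Address
    # Maps each listening port to the container that owns it.
    ports: dict[int, str] = field(default_factory=dict)

    def listen(self, container: str, port: int) -> None:
        # Containers in the same pod share the pod's IP address, so a port
        # can be claimed only once per pod.
        if port in self.ports:
            raise ValueError(f"port {port} already in use in pod {self.name}")
        self.ports[port] = container


class Cluster:
    """Hands out a distinct IP address to every pod (hypothetical model)."""

    def __init__(self, pod_cidr: str) -> None:
        self._free_ips = iter(IPv4Network(pod_cidr).hosts())
        self.pods: dict[str, Pod] = {}

    def create_pod(self, name: str) -> Pod:
        pod = Pod(name=name, ip=next(self._free_ips))
        self.pods[name] = pod
        return pod


cluster = Cluster("172.16.0.0/24")  # example address range, an assumption
web_a = cluster.create_pod("web-a")
web_b = cluster.create_pod("web-b")

# Both pods listen on port 8080; the endpoints differ because the IPs differ.
web_a.listen("nginx", 8080)
web_b.listen("nginx", 8080)
endpoints = {(str(p.ip), port) for p in cluster.pods.values() for port in p.ports}
```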

Persistent storage for a pod can be defined as a volume, such as a local disk directory or a network disk, which Kubernetes exposes to the containers in the pod. A cluster relies on a Network File System (NFS) as its shared persistent storage. Each cluster requires master and worker nodes. For more information about the Platform pods, see Understanding Labels and Pods.
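
As a rough illustration of how a volume is exposed to a container, the following sketch writes a pod manifest as a Python dictionary and checks that every mount refers to a defined volume. The field names (`volumes`, `nfs`, `volumeMounts`, `mountPath`) follow the standard Kubernetes Pod API, but the pod name, image, server, and paths are placeholders, and the helper functions are hypothetical. It does not reflect how OMT itself provisions storage.

```python
# Pod manifest expressed as a Python dict. Field names follow the Kubernetes
# Pod API; every value is a made-up placeholder.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "example-pod"},
    "spec": {
        "containers": [
            {
                "name": "app",
                "image": "example/app:1.0",
                "volumeMounts": [
                    {"name": "shared-config", "mountPath": "/etc/app/config"}
                ],
            }
        ],
        "volumes": [
            {
                "name": "shared-config",
                "nfs": {"server": "nfs.example.com", "path": "/exports/config"},
            }
        ],
    },
}


def undefined_mounts(manifest: dict) -> list[str]:
    """Return volumeMount names that no entry under spec.volumes defines."""
    spec = manifest["spec"]
    defined = {volume["name"] for volume in spec.get("volumes", [])}
    return [
        mount["name"]
        for container in spec["containers"]
        for mount in container.get("volumeMounts", [])
        if mount["name"] not in defined
    ]


def nfs_sources(manifest: dict) -> dict[str, str]:
    """Map each NFS-backed volume name to its 'server:path' export."""
    return {
        volume["name"]: f'{volume["nfs"]["server"]}:{volume["nfs"]["path"]}'
        for volume in manifest["spec"].get("volumes", [])
        if "nfs" in volume
    }


missing = undefined_mounts(pod_manifest)
sources = nfs_sources(pod_manifest)
```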

For more information about Kubernetes, see the following topics:

- Installing kubectl (AWS)

Master Nodes

The master nodes control the Kubernetes cluster, manage the workload on the worker nodes, and direct communication across the system. You should deploy three master nodes to ensure high availability. However, you can use the Platform with a single master node.
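
The recommendation of three master nodes can be motivated with simple quorum arithmetic. The sketch below assumes that the cluster state store requires a strict majority of members to stay available, as etcd does in standard Kubernetes. This topic does not state that rule, so treat it as background rather than as an OMT specification. The function names are hypothetical.

```python
def quorum(members: int) -> int:
    """Smallest number of members that forms a strict majority."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while a majority still remains."""
    return members - quorum(members)


# Compare a single master node with the recommended three.
summary = {n: tolerated_failures(n) for n in (1, 2, 3)}
```

Under this assumption, a single master node tolerates no failures, two master nodes tolerate none either, and three master nodes tolerate the loss of one.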

Network File System

The Network File System (NFS) is a protocol for distributed file sharing. Some of the persistent data generated by Transformation Hub, Intelligence, and Fusion is stored in the NFS. This data, which is unrelated to event data, includes component configuration data for ArcSight Platform-based capabilities.

Worker Nodes

Worker nodes run the application components and perform the work in the Kubernetes cluster. For all highly available configurations, we recommend deploying a minimum of three dedicated worker nodes.

You can add and remove worker nodes from the cluster as needed. Scaling the cluster to perform more work requires additional worker nodes, all of which are managed by the master nodes. The workload assigned to each node depends on the labels assigned to it during deployment or during reconfiguration after deployment.
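
The relationship between labels and workload can be sketched as a simple matching rule: a workload is eligible to run only on nodes that carry every label it asks for. The node names, the label keys and values, and the `eligible_nodes` function below are hypothetical, and the real Kubernetes scheduler also weighs other factors, such as available resources.

```python
def eligible_nodes(
    nodes: dict[str, dict[str, str]], selector: dict[str, str]
) -> list[str]:
    """Return the names of nodes whose labels satisfy every selector entry."""
    return sorted(
        name
        for name, labels in nodes.items()
        if all(labels.get(key) == value for key, value in selector.items())
    )


# Hypothetical worker nodes and labels, for illustration only.
workers = {
    "worker1": {"kafka": "yes", "zk": "yes"},
    "worker2": {"kafka": "yes"},
    "worker3": {"fusion": "yes"},
}

kafka_nodes = eligible_nodes(workers, {"kafka": "yes"})

# Relabeling a node after deployment changes where the workload can run.
workers["worker3"]["kafka"] = "yes"
kafka_nodes_after_relabel = eligible_nodes(workers, {"kafka": "yes"})
```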

Virtual IP Address

OMT supports high availability (HA) through load balancers and the Keepalived service. You can configure either external load balancers or Keepalived for high availability. If you have configured a virtual IP for a multi-master installation, the HA virtual IP address that you defined is bound to one of the three master nodes. If that master node fails, the virtual IP address is reassigned to an active master node. This setup helps to provide high availability for the cluster.
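
The failover behavior can be modeled in a few lines. This is a simplified sketch rather than Keepalived itself: it assumes that the virtual IP address goes to the healthy master node with the highest priority, which is how VRRP-style election generally works. The node names, the priority values, and the `vip_holder` function are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MasterNode:
    name: str
    priority: int  # higher value wins the election (assumed VRRP-style rule)
    healthy: bool = True


def vip_holder(nodes: list[MasterNode]) -> Optional[str]:
    """Return the healthy node that should hold the virtual IP, if any."""
    candidates = [node for node in nodes if node.healthy]
    if not candidates:
        return None
    return max(candidates, key=lambda node: node.priority).name


masters = [
    MasterNode("master1", priority=150),
    MasterNode("master2", priority=100),
    MasterNode("master3", priority=50),
]

holder_before = vip_holder(masters)

# Simulate the failure of the node that currently holds the virtual IP.
masters[0].healthy = False
holder_after = vip_holder(masters)
```

Clients that connect through the virtual IP address do not need to change their configuration when the holder changes, which is the benefit that the virtual IP provides.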

When you configure a connection to the cluster, configure the connection to use the virtual IP so that it benefits from the HA capability. One exception to this recommendation is when you are configuring a connection to Transformation Hub's Kafka, in which case you can achieve better performance by configuring the Kafka connection to connect directly to the list of worker nodes where Kafka is deployed.
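
For the Kafka exception, a client typically receives the worker node list as a comma-separated `bootstrap.servers` value. The following sketch builds that string. The host names are placeholders, the `kafka_bootstrap_servers` helper is hypothetical, and port 9092 is an assumption based on the common Kafka default. Use the port that your Transformation Hub deployment actually exposes.

```python
def kafka_bootstrap_servers(worker_hosts: list[str], port: int = 9092) -> str:
    """Build a Kafka bootstrap.servers value from worker node host names."""
    if not worker_hosts:
        raise ValueError("at least one Kafka worker node is required")
    # Drop duplicate hosts while preserving the order in which they were given.
    unique_hosts = list(dict.fromkeys(worker_hosts))
    return ",".join(f"{host}:{port}" for host in unique_hosts)


# Placeholder host names for the worker nodes where Kafka is deployed.
kafka_workers = [
    "worker1.example.com",
    "worker2.example.com",
    "worker3.example.com",
]

client_config = {"bootstrap.servers": kafka_bootstrap_servers(kafka_workers)}
```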