13.3 Configuring an LVM Volume Group Cluster Resource with NSS Management Tools

This section describes how to use the NSSMU utility and NLVM commands to create a clustered LVM volume group and logical volume on a single SAN device that is assigned to all nodes in the cluster. The volume is mounted exclusively on one node at a time. Clustered LVM manages the locks for the exclusive mount.

After you create the resource, you should view and modify the cluster resource settings and scripts before you bring the resource online. You can also add lines to its load script, unload script, and monitor script to customize the resource for other uses.

If you enable NCP when you create the LVM volume group in NSSMU or with NLVM commands, commands are automatically added to the resource scripts to mount, dismount, and monitor an NCP volume. The NCP volume has the same name as the LVM logical volume, with all letters in uppercase. An NCP Virtual Server object is created for the resource, and commands are added to the scripts to bind it to, or unbind it from, the resource IP address.

13.3.1 Sample Values

The configuration scenarios use the following sample values. Replace the sample values with the information for your configuration.

| Parameter | Sample Value |
|---|---|
| Device name for the shared SAN device | `/dev/sdf` The device is initialized and contains no partitions. It is not enabled as shareable for clustering. |
| Volume group name | `vol44` By default, NSSMU uses the logical volume name as the LVM volume group name. If you use the NLVM create linux volume command to create the LVM volume group cluster resource, you can specify a different name for the volume group, such as vg44. |
| Volume name | `vol44` If you enable NCP for the volume, this name must comply with the Naming Conventions for NCP Volumes. Lowercase letters are automatically changed to uppercase for the NCP volume name. |
| NCP volume name | `VOL44` The NCP volume is created only if you enable NCP when you create the clustered LVM volume by using NSSMU or the NLVM create linux volume command. The mount point path for the NCP volume is the mount point for the LVM volume, so the NCP share is created at the root of the mounted LVM volume when the LVM resource is online. |
| Linux POSIX file system type | `ext3` Valid values are btrfs (requires the btrfsprogs package), ext2, ext3, reiserfs, and xfs. |
| Make options for the file system | None (do not specify a value; press Enter to continue). For a list of the supported file system options for the file system type you are making, see the mkfs(8) man page and the man page for the specific file system: mkfs.btrfs(8), mkfs.ext2(8), mkfs.ext3(8), mkfs.reiserfs(8), or mkfs.xfs(8). IMPORTANT: The file system creation fails if you specify a make option that is not supported by the file system type. |
| Mount options | `rw` The Read/Write (rw) option is specified by default. For a list of available options that work with the file system type you are using, see the mount(8) man page. |
| Volume size | `100 GB` A 100 GB LUN is prepared in the shared storage subsystem and attached to the nodes in the cluster. The device must be initialized and contain no partitions, and it should not be marked as Shareable for clustering. You are not prompted to enter a volume size: the clustered LVM volume group and logical volume use the entire device. When you select the device, all of the device’s free available space is displayed in the Free Size field. |
| Resource IP address | `10.10.10.44` This is the IP address of the virtual server for the cluster resource. The address must be unique and in the same subnet as the cluster’s IP address. Specify the IP address in IPv4 format. |
| Mount device | `/dev/vol44/vol44` The mount device path format is `/dev/<volume_group_name>/<logical_volume_name>`. If you specify a different name for the volume group, such as vg44, by using the NLVM create linux volume command, the mount device path is /dev/vg44/vol44. |
| Mount point path | `/mnt/vol44` You can use any valid Linux path as the mount point. The default mount point location for LVM logical volumes created by NSSMU and NLVM is `/usr/novell/<lx_volume_name>`. NSSMU automatically creates the mount point path if it does not exist on this node. However, you must manually create the mount point on each of the other nodes in the cluster. If the path does not exist on a node when you fail over the resource to it, the resource goes comatose. Alternatively, you can add the following line to the load script before the Linux file system mount command to create the path on a node if it does not exist: `ignore_error mkdir -p $MOUNT_POINT` (the sample load scripts generated by NSSMU and NLVM already include this line). |
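The naming rules in the table above can be expressed as small shell functions, which may help when you plan names before running NSSMU or NLVM. This is a minimal sketch: the function names are hypothetical helpers, not part of either tool, and the NCP name check covers only the length and character rule stated in this section (up to 14 alphanumeric characters, underscores allowed), not every rule in the Naming Conventions for NCP Volumes.

```bash
#!/bin/bash
# Sketch: derive the names that NSSMU/NLVM derive from the LVM logical volume name.
# These helpers are hypothetical illustrations, not part of NSSMU or NLVM.

# NCP volume name: the LVM logical volume name with all letters in uppercase.
ncp_volume_name() {
    printf '%s\n' "$1" | tr '[:lower:]' '[:upper:]'
}

# Mount device path: /dev/<volume_group_name>/<logical_volume_name>.
# If no group name is given, the group has the same name as the volume (NSSMU default).
mount_device() {
    printf '/dev/%s/%s\n' "${2:-$1}" "$1"
}

# Checks only the rule stated in this section: 1 to 14 alphanumeric characters,
# underscores allowed. The full NCP naming conventions may add further rules.
is_valid_ncp_name() {
    printf '%s\n' "$1" | grep -Eq '^[A-Za-z0-9_]{1,14}$'
}
```

For the sample values, `ncp_volume_name vol44` prints VOL44, `mount_device vol44` prints /dev/vol44/vol44, and `mount_device vol44 vg44` prints /dev/vg44/vol44.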

13.3.2 Creating an LVM Volume Group Cluster Resource with NSSMU

This section describes how to use NSSMU to create and cluster-enable an LVM volume group. NSSMU automatically uses the same script format as the Generic File System template (Generic_FS_Template) to create an LVM volume group cluster resource.

-

Ensure that the SAN device is attached to all of the nodes in the cluster.

-

Log in as the root user to the master node of the cluster, then open a terminal console.

-

If you have not already done so, initialize the SAN device that you want to use for the LVM volume group.

LVM allows only one device per volume group. See Section 13.2, Initializing a SAN Device.

-

Launch NSSMU:

nssmu

-

In the NSSMU main menu, select Linux Volumes, then press Enter.

-

On the Linux Volumes page, press Insert to launch the volume creation wizard, then enter the following information as you are prompted for it:

| Parameter | Action |
|---|---|
| Select LVM type | Select Cluster Enabled LVM2 Volume, then press Enter. |
| NCP Enable volume? | Specify whether to enable the LVM logical volume for NCP file access. Press y (Yes) to allow NCP file access, or press n (No) to disallow NCP file access at this time. If you enable NCP, NSSMU automatically adds commands to the resource scripts that mount, dismount, and monitor an NCP volume. The NCP volume has the same name as the LVM logical volume, with all letters in uppercase. The tools automatically create an NCP Virtual Server object for the resource. If you do not enable NCP at this time, you can manually create an NCP virtual server, create an NCP Volume object, and add NCP commands to the scripts later. See Section 13.5, Creating a Virtual Server Object for an LVM Volume Group Cluster Resource. |
| Enter volume name | Type the name for the LVM logical volume, then press Enter. The specified name is also used for the LVM volume group, which is used in the name of the LVM volume group cluster resource. If you enable NCP for the volume, the specified name is also applied to the NCP volume. An NCP volume name can be up to 14 alphanumeric characters, and underscores are allowed. Letters in the LVM volume name are converted to uppercase for the NCP volume name. For example, if the LVM volume name is vol44, the NCP volume name is VOL44. |
| Enter volume IP address | Type the IP address to use for the LVM cluster resource in IPv4 format (such as 10.10.10.44), then press Enter. |
| Select Volume type | Select one of the following Linux POSIX file systems, then press Enter: btrfs (displayed only if the btrfsprogs package is installed), ext2, ext3, reiserfs, or xfs. |
| Enter full mount point path | Type the full mount point path for the LVM logical volume (such as /mnt/vol44), then press Enter. The default path is `/usr/novell/<lx_volume_name>`, such as /usr/novell/vol44. If NCP is enabled, the specified path is also used as the mount point path for the NCP volume. |
| Enter any make options | Press Enter to continue without specifying options, or specify the desired make options for the file system type you are making, then press Enter. For a list of the supported file system options for the file system type you are making, see the mkfs(8) man page and the man page for the specific file system: mkfs.btrfs(8), mkfs.ext2(8), mkfs.ext3(8), mkfs.reiserfs(8), or mkfs.xfs(8). IMPORTANT: The file system creation fails if you specify a make option that is not supported by the specified file system type. |
| Enter any mount options | Press Enter to accept the default read and write option (rw). You can add other mount options or modify this option. For a list of available options that work with the specified file system type, see the mount(8) man page. |
| Device | From the list of available devices, select the device that you initialized in Section 13.2, Initializing a SAN Device, then press Insert or Enter to select it. The LVM volume group requires the entire device, so you cannot specify the amount of space to use. The device’s free available space is displayed in the Free Size field. |


-

Press F3 to accept the setup you entered for the volume group cluster resource.

The resource is created and brought online on the node where you created it. The resource is named <volume_group_name>_resource. In the example, the name of the volume group is the same as the logical volume, so the resource name is vol44_resource.

Typically, volume creation takes less than 10 seconds. However, if you have a large tree or if the server does not hold an eDirectory replica, creation can take up to 3 minutes.

-

In the Linux Volumes list, select the newly created volume and view information about it.

| Parameter | Description |
|---|---|
| Device | Specifies the full device node path for the LVM logical volume. Example: /dev/vol44/vol44 |
| Mount Point | When the resource is online, specifies the path on the root file system where this volume is mounted. Example: /mnt/vol44 |
| Mount options | When the resource is online, specifies the mount options that are applied whenever this volume is automatically mounted after a reboot. Example: rw |
| Type | When the resource is online, specifies the file system type. Examples: btrfs, ext2, ext3, reiserfs, xfs |
| Size | Specifies the amount of space reserved for this volume. Example: 99.58 GB |
| Mounted | Specifies whether the volume is mounted or unmounted. When the resource is brought online, the load script mounts the logical volume. Value: Yes or No |
| State | Specifies the availability of the file system. Example: Read/Write |
| LVM | Specifies whether the volume is an LVM logical volume. Value: Yes |
| NCP | Specifies whether the LVM logical volume is enabled for NCP. The NCP state cannot be determined for a clustered LVM volume. To find out whether the load script contains NCP commands, use the Clusters plug-in for iManager: on the Cluster Options page, select the resource to view its properties, then click the Scripts tab. |
| Share State | Specifies whether the LVM logical volume is cluster-enabled for an OES Cluster Services cluster. Value: Shareable for Clustering. When an LVM volume group is clustered, CLVM manages the share state, not the device. NSSMU reports the device as Not Shareable for Clustering, and reports the clustered LVM volume as Shareable for Clustering. |

-

Press Escape twice to exit NSSMU.

-

Continue with Section 13.3.4, Configuring the LVM Cluster Resource Settings.
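Because NSSMU cannot report the NCP state of a clustered LVM volume, you check the load script for NCP commands instead. If you copy the load script text from the Scripts tab into a local file, a check such as the following sketch can confirm whether the NCP lines are present. The function has_ncp_commands is hypothetical, and it assumes the script contains the ncpcon mount and ncpcon bind lines that the tools generate, as in the sample load scripts in Section 13.3.6.

```bash
#!/bin/bash
# Sketch: report whether a saved copy of a resource load script contains the
# NCP commands that NSSMU/NLVM add when NCP is enabled.
# Assumption: the script text was copied to a local file; this helper does
# not read anything from the cluster.

has_ncp_commands() {
    script_file=$1
    # An NCP-enabled load script mounts the NCP volume and binds the NCP virtual server.
    grep -q 'ncpcon mount' "$script_file" && grep -q 'ncpcon bind' "$script_file"
}

# Optional command-line use: pass the saved script file as the only argument.
if [ "$#" -eq 1 ]; then
    if has_ncp_commands "$1"; then
        echo "NCP commands found in $1"
    else
        echo "No NCP commands found in $1"
    fi
fi
```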

13.3.3 Creating an LVM Volume Group Cluster Resource with NLVM Commands

This section describes how to use NLVM commands to create and cluster-enable an LVM volume group. NLVM automatically uses the same script format as the Generic File System template (Generic_FS_Template) to create an LVM volume group cluster resource. The NLVM command allows you to specify a group name that is different from the volume name.

-

Ensure that the SAN device is attached to all of the nodes in the cluster.

-

Log in to the master node of the cluster as the root user, then open a terminal console.

-

If you have not already done so, initialize the SAN device that you want to use for the LVM volume group.

-

Create a clustered LVM volume group and logical volume. At the command prompt, enter the following command (all on one line):

nlvm [nlvm_options] create linux volume type=<btrfs|ext2|ext3|reiserfs|xfs> device=<device_name> [mp=</mount_path>] [mkopt=<option1[,option2,...]>] [mntopt=<option1[,option2,...]>] lvm name=<lvm_volume_name> [group=<lvm_group_name>] shared ip=<IP_address_for_LVM_volgroup_cluster_resource> [ncp]

For details about using this command, see Create Linux Volume in the OES 2023: NLVM Reference.

Parameters and Options

- type=<btrfs|ext2|ext3|reiserfs|xfs>

-

You must specify the type of file system to use for the volume. Btrfs requires that the btrfsprogs package is installed on all nodes. Supported file systems are btrfs, ext2, ext3, reiserfs, and xfs.

- device=<device_name>

-

You must specify the device to use. Replace device_name with the device node name of the device that you want to use for the volume, such as sdf. The entire device is used for the LVM volume group. You cannot specify a size to use. The device must already be initialized, contain no partitions, and must not be marked as shareable for clustering.

- [mp=</mount_path>]

-

If a mount point path is not specified, the utility assigns a default mount path of /usr/novell/<volume_name>. Use the mp option to specify a custom mount point. Replace mount_path with the full Linux path of the mount point where the volume is to be mounted. The final directory name in the path can be the same as or different from the specified volume name. If the path does not currently exist, it is automatically created on that node. You must manually create the path on the other nodes in the cluster.

- [mkopt=<option1[,option2,...]>]

-

You can use the mkopt option to specify the options to use when running mkfs. No default option is specified. For a list of the supported file system options for the file system type you are making, see the mkfs(8) man page and the man page for the specific file system: mkfs.btrfs(8), mkfs.ext2(8), mkfs.ext3(8), mkfs.reiserfs(8), or mkfs.xfs(8).

IMPORTANT:The file system creation fails if you specify a make option that is not supported by the file system type.

- [mntopt=<option1[,option2,...]>]

-

You can use the mntopt option to specify the options to use when mounting the volume. For a list of available options, see the mount(8) man page. The default mntopt value is rw (Read/Write).

- lvm

-

You must specify the lvm option to create an LVM volume group and logical volume. Use this option with the name option.

- name=<lvm_volume_name>

-

Replace lvm_volume_name with a name for the LVM volume. If you do not specify the group option, this name is also used as the LVM volume group name, which is used in the cluster resource name. For LVM logical volume naming conventions, see Linux LVM Volume Group and Logical Volume Names in the OES 2023: NLVM Reference.

If you use the ncp option, the NCP volume uses the same name as the LVM logical volume, but all letters are uppercase. NCP volume names can be up to 14 alphanumeric characters, and underscores are allowed.

- [group=<lvm_group_name>]

-

Replace lvm_group_name with a name for the LVM volume group. The group name is also used in the cluster resource name. If you do not specify a volume group name, the group is automatically named the same as the LVM volume.

- shared

-

You must specify the shared option to create a clustered LVM volume group and logical volume.

- ip=<IP_address_for_LVM_volgroup_cluster_resource>

-

Replace IP_address_for_LVM_volgroup_cluster_resource with a static unique IP address to use for the LVM cluster resource. Specify the address in IPv4 format.

- ncp

-

Specify the ncp option to enable the LVM logical volume for NCP file access.

If you enable NCP, NLVM automatically adds commands to the resource scripts that mount, dismount, and monitor an NCP volume. The NCP volume has the same name as the LVM logical volume, with all letters in uppercase. The tools automatically create an NCP Virtual Server object for the resource.

If you do not enable NCP at this time, you can manually create a virtual server and add NCP file access later. See Section 13.5, Creating a Virtual Server Object for an LVM Volume Group Cluster Resource.

-

For example, at the command prompt, enter the following (all on one line):

nlvm create linux volume type=ext3 device=sdf mp=/mnt/vol44 mntopt=rw lvm name=vol44 group=vg44 shared ip=10.10.10.44 ncp

If the command is successful, the response is

Linux clustered volume vol44 created.

Typically, volume creation takes less than 10 seconds. However, if you have a large tree or if the server does not hold an eDirectory replica, creation can take up to 3 minutes.

-

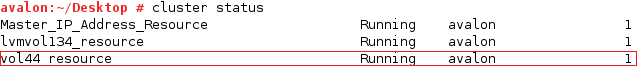

Verify that the cluster resource was created and brought online by entering

cluster status

The resource is named <lv_name>_resource. In the following example, vol44_resource is in the Running state.

-

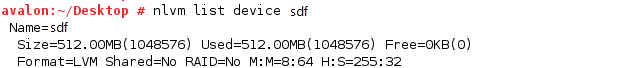

View the device information to see that the device shows the LVM format. At the command prompt, enter

nlvm list device <device_name>Replace device_name with the name of the device that you used, such as sdf.

The command shows that the LVM container uses the entire device; the size and used space values are the same and the free space shows no space. The partitioning format is LVM.

For example, enter

nlvm list device sdf

-

Continue with Section 13.3.4, Configuring the LVM Cluster Resource Settings.
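Because the create command is long and must be entered on one line, it can help to assemble it from your planned values and review it before running it on the master node. The following sketch only prints a command string and runs nothing. build_nlvm_create is a hypothetical helper, its argument order is an assumption, and it always includes mntopt=rw (the default) to mirror the example in this section.

```bash
#!/bin/bash
# Sketch: assemble (but do not run) an "nlvm create linux volume" command for a
# clustered LVM volume. build_nlvm_create is hypothetical, not part of NLVM.
#
# Arguments: type device lv_name ip [group] [mount_path] [ncp: yes|no]
# device is the device node name without /dev, such as sdf.
build_nlvm_create() {
    fs_type=$1 device=$2 lv_name=$3 ip=$4
    group=${5:-}        # optional; NLVM names the group after the volume if omitted
    mount_path=${6:-}   # optional; NLVM defaults to /usr/novell/<volume_name>
    ncp=${7:-no}        # "yes" appends the ncp option

    case $fs_type in
        btrfs|ext2|ext3|reiserfs|xfs) ;;
        *) echo "unsupported file system type: $fs_type" >&2; return 1 ;;
    esac

    # Rough IPv4 shape check only: it does not check octet ranges, uniqueness,
    # or whether the address is in the cluster's subnet.
    if ! printf '%s\n' "$ip" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$'; then
        echo "IP address must be in IPv4 format: $ip" >&2
        return 1
    fi

    cmd="nlvm create linux volume type=$fs_type device=$device"
    if [ -n "$mount_path" ]; then
        cmd="$cmd mp=$mount_path"
    fi
    # rw is also the default mount option; it is listed to match the example.
    cmd="$cmd mntopt=rw lvm name=$lv_name"
    if [ -n "$group" ]; then
        cmd="$cmd group=$group"
    fi
    cmd="$cmd shared ip=$ip"
    if [ "$ncp" = "yes" ]; then
        cmd="$cmd ncp"
    fi
    printf '%s\n' "$cmd"
}
```

With the example values, `build_nlvm_create ext3 sdf vol44 10.10.10.44 vg44 /mnt/vol44 yes` prints the same command as the example above.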

13.3.4 Configuring the LVM Cluster Resource Settings

After you create a clustered LVM volume group and logical volume, use the procedure in this section to verify that the LVM volume group cluster resource was created and is online. You can customize the resource policies, monitoring, and preferred nodes settings.

-

Open iManager in a web browser, then log in as a cluster administrator user.

-

In Roles and Tasks, select Clusters > My Clusters.

-

Select the cluster where you created the volume group cluster resource.

If the cluster you want to manage is not in the list, you can add it. Click Add, browse to locate and select the Cluster object of the cluster, then click OK.

-

In the list of resources, locate the new resource and notice the state of the resource. It should be online and running.

-

Click the resource’s name link to open its Properties page.

You can alternatively go to the Cluster Options page, then click the resource’s name link, or select the check box next to the resource and click Details.

-

On the Resource Policies page, view and modify the resource’s Policy settings if needed. Click Apply if you make changes.

-

(Optional) Select the Resource Follows Master check box if you want to ensure that the resource runs only on the master node in the cluster.

If the master node in the cluster fails, the resource fails over to the node that becomes the master.

-

(Optional) Select the Ignore Quorum check box if you don’t want the cluster-wide timeout period and node number limit enforced.

The quorum default values were set when you installed OES Cluster Services. You can change the quorum default values by accessing the properties page for the Cluster object.

Selecting this box ensures that the resource is launched immediately on any server in the Preferred Nodes list as soon as any server in the list is brought online.

-

By default, the Generic File System resource template sets the Start mode and Failover mode to Auto and the Failback Mode to Disable. You can change the default settings as needed.

-

Start Mode: If the Start mode is set to Auto, the resource automatically loads on a designated server when the cluster is first brought up. If the Start mode is set to Manual, you can manually start the resource on a specific server when you want, instead of having it automatically start when servers in the cluster are brought up.

-

Failover Mode: If the Failover mode is set to Auto, the resource automatically moves to the next server in the Preferred Nodes list if there is a hardware or software failure. If the Failover mode is set to Manual, you can intervene after a failure occurs and before the resource is started on another node.

-

Failback Mode: If the Failback mode is set to Disable, the resource continues running on the node it has failed over to. If the Failback mode is set to Auto, the resource automatically moves back to its preferred node when the preferred node is brought back online. Set the Failback mode to Manual to prevent the resource from moving back to its preferred node when that node is brought back online, until you are ready to allow it to happen.


-

Enable and configure monitoring for the resource, then click Apply.

See Section 10.7, Enabling Monitoring and Configuring the Monitor Script.

-

In the Properties page, select the Monitoring tab.

-

Select the Enable Resource Monitoring check box to enable resource monitoring for the selected resource.

Resource monitoring is disabled by default.

-

Specify the Polling Interval to control how often you want the resource monitor script for this resource to run.

You can specify the value in minutes or seconds. See Polling Interval.

-

Specify the number of failures (Maximum Local Failures) for the specified amount of time (Time Interval).

See Failure Rate.

-

Specify the Failover Action by indicating whether you want the resource to be set to a comatose state, to migrate to another server, or to reboot the hosting node (without synchronizing or unmounting the disks) if a failure action initiates. The reboot option is normally used only for a mission-critical cluster resource that must remain available.

See Failure Action.


-

Click the Preferred Nodes tab, assign preferred nodes for the resource by moving them from the Unassigned list to the Assigned list, then click Apply.

When you configure a volume group cluster resource with NSSMU or with NLVM commands, the node where you create it is automatically assigned as a preferred node for the resource.

IMPORTANT:For Assigned nodes, ensure that you prepare the node for the services in the resource before you migrate or fail over the resource to it.

When you bring a resource online, it is automatically loaded on the most preferred node in the list. If the node is not available, the other nodes are tried in the order that they appear in the list. You can modify the order of the nodes by clicking the Edit (pen) icon to open the list in a text editor. In the editor, reorder the nodes, click OK to close the editor, then click Apply to save your changes.

-

At the bottom of the page, click OK to close the Properties page and save your changes.

The changes do not take effect until the resource is taken offline and brought online again.

-

Continue with Section 13.3.5, Viewing or Modifying the LVM Resource Scripts.
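The Preferred Nodes behavior described in this section (the resource loads on the most preferred node, and if that node is not available, the other nodes are tried in list order) can be modeled in a few lines of shell. This sketch illustrates the described ordering only; it is not how OES Cluster Services implements node selection, and pick_node and the node names are hypothetical.

```bash
#!/bin/bash
# Sketch: model of choosing a start node from an ordered Preferred Nodes list.
# Illustration only; this is not OES Cluster Services code.
#
# Usage: pick_node "<preferred nodes, most preferred first>" "<nodes currently available>"
pick_node() {
    preferred=$1
    available=$2
    # Walk the preferred list in order and return the first node that is available.
    for node in $preferred; do
        for up in $available; do
            if [ "$node" = "$up" ]; then
                printf '%s\n' "$node"
                return 0
            fi
        done
    done
    return 1   # none of the preferred nodes is available
}
```

For example, `pick_node "node1 node2 node3" "node2 node3"` prints node2, because node1 is not available and node2 is next in the list.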

13.3.5 Viewing or Modifying the LVM Resource Scripts

You can customize the scripts by adding lines for other products that use a shared LVM volume group resource. Compare the generic script with the templates for those products to identify what lines need to be added or modified.

-

In iManager, select Clusters > My Clusters.

-

Select the cluster where you created the volume group cluster resource.

-

Select the cluster resource’s name link to open the Properties page, then click the Scripts tab.

The Scripts tab automatically opens to the load script.

-

On the Load Script page, view or modify the load script. Click Apply if you make changes.

Ensure that all letters in the NCP volume name are uppercase letters A to Z. See the Sample LVM Resource Load Scripts Created by NSS Management Tools.

-

Click the Unload Script link to view or modify the unload script. Click Apply if you make changes.

Ensure that all letters in the NCP volume name are uppercase letters A to Z. See the Sample LVM Resource Unload Scripts Created by NSS Management Tools.

-

Click the Monitor Script link to view or modify the monitor script. Click Apply if you make changes.

Ensure that all letters in the NCP volume name are uppercase letters A to Z. See the Sample LVM Resource Monitor Scripts Created by NSS Management Tools.

-

At the bottom of the page, click OK to close the Properties page and save your changes.

The changes do not take effect until the resource is taken offline and brought online again.

-

If you modified the settings on any of the pages, you must take the resource offline and bring it online in order for the changes to take effect.

-

In Roles and Tasks, select Clusters > My Clusters.

-

On the Cluster Manager page, select the check box next to the resource, then click Offline.

Wait for the status to report that it is offline, then continue.

-

Select the check box next to the resource, then click Online.

-

Verify that the resource comes online and reports a Running state.

If the resource goes into a Comatose state, it is probably because you made a mistake in the lines you added or modified in the scripts. Take the resource offline, then go back to correct the scripts, and try to bring it online again.
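The load, unload, and monitor script steps each ask you to ensure that the NCP volume name uses only uppercase letters. If you save a copy of a script to a local file, a check like the following sketch can flag lowercase letters before you apply the script. check_ncp_volume_case is a hypothetical helper that assumes the name is defined on a line of the form NCP_VOLUME=<name>, as in the sample scripts in Section 13.3.6.

```bash
#!/bin/bash
# Sketch: check that the NCP_VOLUME value in a saved copy of a load, unload,
# or monitor script contains no lowercase letters.
# Assumption: the script defines the name as NCP_VOLUME=<name> at the start of a line.
# Return codes: 0 = uppercase, 1 = lowercase found, 2 = no NCP_VOLUME definition.
check_ncp_volume_case() {
    script_file=$1
    # Take the value from the first NCP_VOLUME= assignment in the file.
    name=$(sed -n 's/^[[:space:]]*NCP_VOLUME=\([^[:space:]]*\).*/\1/p' "$script_file" | head -n 1)
    if [ -z "$name" ]; then
        echo "no NCP_VOLUME definition in $script_file" >&2
        return 2
    fi
    if printf '%s\n' "$name" | grep -q '[[:lower:]]'; then
        echo "NCP volume name $name contains lowercase letters" >&2
        return 1
    fi
    echo "NCP volume name $name is uppercase"
}
```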


13.3.6 Sample LVM Resource Scripts

The sample LVM resource scripts in this section are automatically generated for an LVM volume group cluster resource when you use NLVM or NSSMU to create a clustered LVM2 volume, as described in Section 13.3, Configuring an LVM Volume Group Cluster Resource with NSS Management Tools. See Section 13.3.1, Sample Values for information about the sample values used in these scripts. Ensure that you replace sample values with those for your own system.

Sample LVM Resource Load Scripts Created by NSS Management Tools

Compare the load scripts in this section to identify the lines that are added when you enable the LVM logical volume for NCP file access:

Without NCP File Access

```bash
#!/bin/bash
. /opt/novell/ncs/lib/ncsfuncs

# define the IP address
RESOURCE_IP=10.10.10.44
# define the file system type
MOUNT_FS=ext3
# define the volume group name (nssmu uses volume name for group name)
VOLGROUP_NAME=vol44
# define the device
MOUNT_DEV=/dev/$VOLGROUP_NAME/vol44
# define the mount point
MOUNT_POINT=/mnt/vol44

# activate the volume group
exit_on_error vgchange -a ey $VOLGROUP_NAME

# create the mount point if it does not exist on the node
ignore_error mkdir -p $MOUNT_POINT

# mount the file system
exit_on_error mount_fs $MOUNT_DEV $MOUNT_POINT $MOUNT_FS

# add the IP address
exit_on_error add_secondary_ipaddress $RESOURCE_IP

exit 0
```

With NCP File Access

```bash
#!/bin/bash
. /opt/novell/ncs/lib/ncsfuncs

# define the IP address
RESOURCE_IP=10.10.10.44
# define the file system type
MOUNT_FS=ext3
# define the volume group name (nssmu uses volume name for group name)
VOLGROUP_NAME=vol44
# define the device
MOUNT_DEV=/dev/$VOLGROUP_NAME/vol44
# define the mount point
MOUNT_POINT=/mnt/vol44
# define NCP server name
NCP_SERVER=clus1-vol44-SERVER
# define NCP volume name
NCP_VOLUME=VOL44

# activate the volume group
exit_on_error vgchange -a ey $VOLGROUP_NAME

# create the mount point if it does not exist on the node
ignore_error mkdir -p $MOUNT_POINT

# mount the file system
exit_on_error mount_fs $MOUNT_DEV $MOUNT_POINT $MOUNT_FS

# mount the NCP volume
exit_on_error ncpcon mount $NCP_VOLUME=251,PATH=$MOUNT_POINT

# add the IP address
exit_on_error add_secondary_ipaddress $RESOURCE_IP

# bind the NCP virtual server to the resource IP address
exit_on_error ncpcon bind --ncpservername=$NCP_SERVER --ipaddress=$RESOURCE_IP

exit 0
```

Sample LVM Resource Unload Scripts Created by NSS Management Tools

Compare the unload scripts in this section to identify the lines that are added when you enable the LVM logical volume for NCP file access:

Without NCP File Access

```bash
#!/bin/bash
. /opt/novell/ncs/lib/ncsfuncs

# define the IP address
RESOURCE_IP=10.10.10.44
# define the file system type
MOUNT_FS=ext3
# define the volume group name (nssmu uses volume name for group name)
VOLGROUP_NAME=vol44
# define the device
MOUNT_DEV=/dev/$VOLGROUP_NAME/vol44
# define the mount point
MOUNT_POINT=/mnt/vol44

# del the IP address
ignore_error del_secondary_ipaddress $RESOURCE_IP

# unmount the volume
sleep 10 # if not using SMS for backup, please comment out this line
exit_on_error umount_fs $MOUNT_DEV $MOUNT_POINT $MOUNT_FS

# deactivate the volume group
exit_on_error vgchange -a n $VOLGROUP_NAME

exit 0
```

With NCP File Access

```bash
#!/bin/bash
. /opt/novell/ncs/lib/ncsfuncs

# define the IP address
RESOURCE_IP=10.10.10.44
# define the file system type
MOUNT_FS=ext3
# define the volume group name (nssmu uses volume name for group name)
VOLGROUP_NAME=vol44
# define the device
MOUNT_DEV=/dev/$VOLGROUP_NAME/vol44
# define the mount point
MOUNT_POINT=/mnt/vol44
# define NCP server name
NCP_SERVER=clus1-vol44-SERVER
# define NCP volume name
NCP_VOLUME=VOL44

# unbind the NCP virtual server from the resource IP address
ignore_error ncpcon unbind --ncpservername=$NCP_SERVER --ipaddress=$RESOURCE_IP

# del the IP address
ignore_error del_secondary_ipaddress $RESOURCE_IP

# dismount the NCP volume
ignore_error ncpcon dismount $NCP_VOLUME

# unmount the volume
sleep 10 # if not using SMS for backup, please comment out this line
exit_on_error umount_fs $MOUNT_DEV $MOUNT_POINT $MOUNT_FS

# deactivate the volume group
exit_on_error vgchange -a n $VOLGROUP_NAME

exit 0
```

Sample LVM Resource Monitor Scripts Created by NSS Management Tools

Compare the monitor scripts in this section to identify the lines that are added when you enable the LVM logical volume for NCP file access. To use the script, you must also enable monitoring for the resource. See Section 10.7, Enabling Monitoring and Configuring the Monitor Script.

Without NCP File Access

```bash
#!/bin/bash
. /opt/novell/ncs/lib/ncsfuncs

# define the IP address
RESOURCE_IP=10.10.10.44
# define the file system type
MOUNT_FS=ext3
# define the volume group name
VOLGROUP_NAME=vol44
# define the device
MOUNT_DEV=/dev/$VOLGROUP_NAME/vol44
# define the mount point
MOUNT_POINT=/mnt/vol44

# check the logical volume
exit_on_error status_lv $MOUNT_DEV

# test the file system
exit_on_error status_fs $MOUNT_DEV $MOUNT_POINT $MOUNT_FS

# status the IP address
exit_on_error status_secondary_ipaddress $RESOURCE_IP

exit 0
```

With NCP File Access

```bash
#!/bin/bash
. /opt/novell/ncs/lib/ncsfuncs

# define the IP address
RESOURCE_IP=10.10.10.44
# define the file system type
MOUNT_FS=ext3
# define the volume group name
VOLGROUP_NAME=vol44
# define the device
MOUNT_DEV=/dev/$VOLGROUP_NAME/vol44
# define the mount point
MOUNT_POINT=/mnt/vol44
# define NCP server name
NCP_SERVER=clus1-vol44-SERVER
# define NCP volume name
NCP_VOLUME=VOL44

# check the LVM logical volume
exit_on_error status_lv $MOUNT_DEV

# check the NCP volume
exit_on_error ncpcon volume $NCP_VOLUME

# check the file system
exit_on_error status_fs $MOUNT_DEV $MOUNT_POINT $MOUNT_FS

# status the IP address
exit_on_error status_secondary_ipaddress $RESOURCE_IP

exit 0
```
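The sample scripts wrap most commands in the ncsfuncs helpers exit_on_error and ignore_error. As these scripts use them, a failed exit_on_error command stops the script (one way a resource ends up comatose after a script mistake), while an ignore_error command, such as the mkdir -p that creates the mount point, is allowed to fail without stopping the script. The following sketch gives simplified stand-ins that you might use to trace a script's control flow on a test machine. They are assumptions for illustration, not the real functions in /opt/novell/ncs/lib/ncsfuncs, which may also log and handle other details.

```bash
#!/bin/bash
# Sketch: simplified stand-ins for the ncsfuncs helpers used in the sample scripts.
# These are NOT the real /opt/novell/ncs/lib/ncsfuncs implementations.

# Run a command; if it fails, stop the whole script with the command's status.
exit_on_error() {
    "$@"
    rc=$?
    if [ "$rc" -ne 0 ]; then
        echo "FAILED ($rc): $*" >&2
        exit "$rc"
    fi
}

# Run a command and continue even if it fails.
ignore_error() {
    "$@" || echo "ignored failure: $*" >&2
    return 0
}
```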